In my post Use Google Search Suggestions for Keywords Research I cover why the paid keyword search tools are not very effective for building a keyword list. A better route is to use Google's search suggestions.

You can do this manually by going to Google, typing in a search, and waiting for the 10 suggested keywords to drop down. However, there is no easy way to copy these suggestions for keyword research. That is where the Node.js library Puppeteer comes in handy.

I made this quick video to help illustrate how to build this keyword bot.

Build a Keyword Scraper with Node.js and Puppeteer

We can build a small scraper with Puppeteer that will run the search for us and grab the suggested keywords. Then we will output them in the terminal in JSON format.

1.Set Up a Node.js Project

You will need to have Node.js installed and running on your computer for this project.

First create your project folder and open the terminal in that folder. Then in the terminal run npm init, or npm init -y to accept the default options.

Now that we have Node.js set up, we can run npm install puppeteer in the terminal from our project folder. This is the only dependency we will need.

2.Quick Console.log Test

Now we can create index.js in the project folder and we are ready to start building.

In index.js add console.log("Working"); as a quick check that our application is running.

In the terminal type node index.js to run the application, and we should see "Working" printed.

3.Set Up Puppeteer

From here on we will be working in index.js. First we need to require Puppeteer to get access to the library. Then we wrap our code in an async function and use await so each step waits for the previous one to finish running.

First we launch Puppeteer and store the result in a const named browser, then we open a new page in that browser and store it in a const named page. Now Puppeteer is ready to go to a URL. Finally we close the browser and end the async function.

const puppeteer = require('puppeteer');

async function asyncCall() {
  // open a new browser
  const browser = await puppeteer.launch();

  // create a new page
  const page = await browser.newPage();

  // navigate to google
  await page.goto('https://www.google.com');

  await browser.close();
}

asyncCall();

5.Search for Keywords of a Topic

Now that we are on the Google page, Puppeteer needs to know how to find the search input. Using Chrome developer tools we can see that the search input's name attribute is "q". The "*" in the selector matches any element type, so "*[name='q']" matches any element whose name attribute equals "q".

Now we tell Puppeteer to type our keyword topic into the search form. I used "sail boats". We also add a delay of 500 milliseconds between keystrokes so the typing looks like a real user entering a search.

For the search query we want to start with "sail boats a" and work through the alphabet to "sail boats z". This surfaces a broad range of topical keywords about sail boats.
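As a minimal sketch of the a-to-z idea, the queries can be generated instead of typed by hand. The helper name buildQueries is an assumption made for illustration and is not part of Puppeteer.

```javascript
// Build one search query per letter: "sail boats a" ... "sail boats z".
// buildQueries is a hypothetical helper name used only for this sketch.
function buildQueries(topic) {
  const letters = 'abcdefghijklmnopqrstuvwxyz'.split('');
  return letters.map((letter) => `${topic} ${letter}`);
}

const queries = buildQueries('sail boats');
console.log(queries.length); // 26
console.log(queries[0]); // sail boats a
```

Each string in queries could then be passed to page.type in place of the hard-coded "sail boats a".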

// type the keyword slowly, one keystroke every 500 ms
await page.type("*[name='q']", "sail boats a", { delay: 500 });

// wait for the suggestion list (ul with role="listbox")
await page.waitForSelector("ul[role='listbox']");

The last line waits for the unordered list element with the role attribute "listbox" to appear.
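If Google shows no suggestions for a query, waitForSelector eventually rejects with a timeout error (30 seconds by default, as far as I know). The sketch below is one possible way to handle that. The helper name waitForSuggestions and the 5 second default are assumptions, and the check on err.name relies on Puppeteer naming its error TimeoutError.

```javascript
// Hypothetical helper: resolves to true if the suggestion list appeared,
// or false if waitForSelector gave up after timeoutMs.
async function waitForSuggestions(page, timeoutMs = 5000) {
  try {
    await page.waitForSelector("ul[role='listbox']", { timeout: timeoutMs });
    return true;
  } catch (err) {
    // Assumption: Puppeteer's timeout error has the name "TimeoutError".
    if (err.name === 'TimeoutError') return false;
    throw err; // anything else is unexpected, so pass it on
  }
}
```

In the scraper this could replace the bare waitForSelector call, skipping the extraction step when the helper returns false.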

6.Extract the Keywords

Here is the fun part! I used the evaluate method with an arrow function, which runs inside the page and lets us read the HTML elements and return the results.

Now we can use a query selector to select the elements with the class "wM6W7d" inside each list item. This is the class name Google is using at the time of writing, so it may change.

Then we use the Object.values method, which gives us an array of the matched elements. If we logged Object.keys of listBox instead, we would see the indices 0 through 9.

Now we can use the map method to create a new array with one entry per element. Then with the innerText property we get the human-readable text for each list item.
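To see what Object.values and map are doing here without a browser, the sketch below uses a plain object as a stand-in for the list that querySelectorAll returns. The stand-in and its sample text are made up for illustration. A real NodeList holds DOM elements, not plain objects.

```javascript
// Stand-in for the result of querySelectorAll (Node.js has no DOM).
// The suggestion texts are made-up sample data.
const listBox = {
  0: { innerText: 'sail boats for sale' },
  1: { innerText: 'sail boats for rent' },
};

// The keys are the indices, as strings.
console.log(Object.keys(listBox)); // [ '0', '1' ]

// The values are the items themselves, which map turns into keyword objects.
const items = Object.values(listBox).map((x) => ({ keyword: x.innerText }));
console.log(items);
```

In a browser, Array.from(listBox) is a common alternative to Object.values for turning a NodeList into an array.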

// extract keywords from ul li span
const search = await page.evaluate(() => {
  // select the suggestion elements inside each li
  let listBox = document.body.querySelectorAll("ul[role='listbox'] li .wM6W7d");

  // loop over each element, referred to as x
  let item = Object.values(listBox).map(x => {
    return {
      keyword: x.querySelector('span').innerText,
    }
  });

  return item;
});

// logging results
console.log(search);

We return the item array and can then read the result from the search const.
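Note that console.log on an array of objects prints Node's own inspection format, which is close to JSON but not strict JSON. If strict JSON is wanted, for example to paste into another tool, a sketch like the following should work. The sample data is made up and only mirrors the shape of the search result.

```javascript
// Sample data in the same shape as the search result (values are made up).
const search = [
  { keyword: 'sail boats for sale' },
  { keyword: 'sail boats for rent' },
];

// JSON.stringify with an indent of 2 produces strict, pretty-printed JSON.
const json = JSON.stringify(search, null, 2);
console.log(json);
```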

Putting it all together, here is the full code.

const puppeteer = require('puppeteer');

// the search query to type into Google
const keyword = "sail boats b";

async function asyncCall() {
  // open a new browser
  const browser = await puppeteer.launch();

  // create a new page
  const page = await browser.newPage();

  // navigate to google
  await page.goto('https://www.google.com');

  // type the keyword slowly, one keystroke every 500 ms
  await page.type("*[name='q']", keyword, { delay: 500 });

  // wait for the suggestion list (ul with role="listbox")
  await page.waitForSelector("ul[role='listbox']");

  // extract keywords from ul li span
  const search = await page.evaluate(() => {
    // select the suggestion elements inside each li
    let listBox = document.body.querySelectorAll("ul[role='listbox'] li .wM6W7d");

    // loop over each element, referred to as x
    let item = Object.values(listBox).map(x => {
      return {
        keyword: x.querySelector('span').innerText,
      }
    });

    return item;
  });

  // logging results
  console.log(search);

  await browser.close();
}

asyncCall();

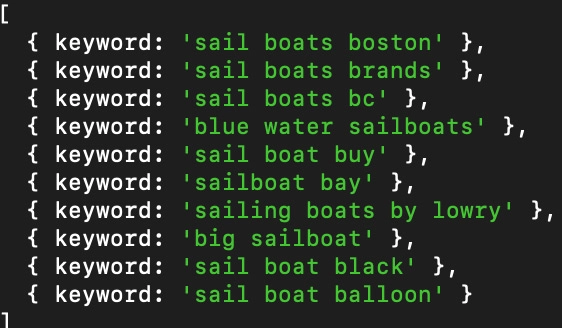

Now if we run node index.js in the terminal we should see the contents of the search const logged to the console. For each search we get the top 10 keywords and phrases.
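One thing the script does not handle is a step failing partway through, which would leave the browser process running. A possible sketch of a fix is a small wrapper with try and finally. The name withBrowser and the idea of passing in a launch function are assumptions made for illustration.

```javascript
// Hypothetical helper: run `task` with a browser and always close it.
// `launch` is passed in so the sketch does not depend on how the browser
// is created. With Puppeteer it would be () => puppeteer.launch().
async function withBrowser(launch, task) {
  const browser = await launch();
  try {
    return await task(browser);
  } finally {
    // Runs whether task resolved or threw.
    await browser.close();
  }
}
```

With Puppeteer required as in the script, the body of asyncCall could be passed as the task, for example withBrowser(() => puppeteer.launch(), async (browser) => { ... }).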

We can go through the alphabet and quickly collect hundreds of keywords for a topic. You can follow these suggestions like breadcrumbs to find valuable keywords that are being overlooked by big data tools like Semrush, Moz and Ahrefs.
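Going through the alphabet means running the same search many times and merging the results. The sketch below shows one way that could look. The helper collectKeywords, its fetchSuggestions parameter, and the one second pause are all assumptions. fetchSuggestions stands for an async function that takes a query and resolves to an array of keyword objects like the ones logged by the script.

```javascript
// Hypothetical helper: run every query through fetchSuggestions and merge
// the results, dropping duplicates. fetchSuggestions is assumed to resolve
// to an array of { keyword } objects.
async function collectKeywords(queries, fetchSuggestions, pauseMs = 1000) {
  const seen = new Set();
  for (const query of queries) {
    const results = await fetchSuggestions(query);
    for (const { keyword } of results) {
      // Normalize so "Sail Boats" and "sail boats" count as one keyword.
      seen.add(keyword.trim().toLowerCase());
    }
    // Pause between searches so they are not fired back to back.
    await new Promise((resolve) => setTimeout(resolve, pauseMs));
  }
  return [...seen];
}
```

The Set keeps only unique keywords, which matters because neighbouring letters often return overlapping suggestions.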

Conclusion

This is a powerful tool for quickly seeing live keyword results. We can collect the relevant keywords that are currently trending without even opening a browser window.